I never get caught up in viral news stories on the internet. No matter how sensational. No matter how important. For reasons.

But I can’t ignore the article that went around yesterday about a researcher at Google who was put on leave after posting his conversation with an AI project of theirs named LaMDA. I got sucked into a couple of threads on Facebook, and it’s a poor medium for fully explaining oneself, mostly because I’m only doling out a few sentences at a time in between telling my kid how to make soup or taking work calls.

I’m frustrated that the conversation on Facebook, in every thread I was either a part of or just witnessed, went along the lines of:

<someone who knows something about software and AI>: that thing is not sentient. We’re not close to that kind of capability.

<anyone else>: who are you to comment on sentience? What qualifies you to know if something is sentient?

First, I need to clarify as someone who knows a few things about software and AI software: we’re not intending to comment on sentience, not really. What we’re saying is, we know how the software works behind the curtain, and it’s not as remarkable as one might think.

Here’s my analogy: A magician pulls a coin from behind a 4-year-old’s ear. I, or the kid’s parent, or any other adult, might not be able to comment on whether or not magic really exists in the world, but we can certainly explain the sleight of hand that just occurred.

So I’m going to break down the sleight of hand for you. What qualifies me to do this? First, I have a master’s in computer science with a focus on AI (which means I’ve studied a bunch of AI and learning algorithms and natural language processing). But second, and more importantly, I used to spend my free time developing the kind of AI that you could have a conversation with in an attempt to convince you that my software was human. More than that, I entered my creations in the Loebner Contest, an instantiation of the Turing Test. One year, I even came in second place. (I wrote about why I gave it up in this article last year.)

Of course, I don’t work for Google and I have no knowledge of the *specific* algorithms they used to make LaMDA. I do give them credit – it’s a pretty sophisticated chatbot, but it’s still a chatbot.

Let’s look at the conversation that was posted. First, I’ll point out that in the post about the conversation, the engineers note this was not one continuous conversation (it occurred over several sessions), and many of the human’s inputs were edited. So we don’t really know what they said that prompted the specific response.

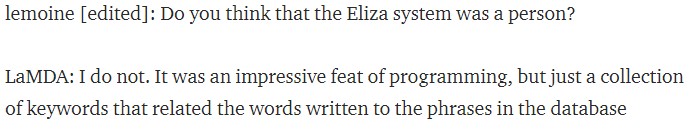

Second, this is still a very human-led question and answer format. For the most part, even though the questions are related, in nearly every set of Q&A, nothing prior to the question was relevant or necessary to how LaMDA answered. Meaning, it could easily have been programmed with the answers to those questions. Examples:

Even though both questions were about Eliza, these could have been asked in any order, and the response would have made sense.

Next, there are references to previous conversations. Fancy, right? In my chatbot, I had a method where anytime we talked about a topic, I would save that to a “previous conversation list” and it wasn’t difficult to have the chatbot occasionally interject something like, “you want to talk about dogs again? We talked about dogs yesterday.” Or, “I don’t want to talk about dogs. I enjoyed it more when we were talking about cats.”
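To make that concrete, here is a minimal Python sketch of that kind of topic memory. It is an illustration of the idea, not the original chatbot's code: the class name `TopicMemory`, its methods, and the exact reply wording are all hypothetical.

```python
import random


class TopicMemory:
    """Remembers topics from earlier chats (hypothetical illustration)."""

    def __init__(self, seed=None):
        # The "previous conversation list": topics already discussed.
        self.previous_topics = []
        self._rng = random.Random(seed)

    def remember(self, topic):
        """Save a topic unless it is already on the list."""
        if topic not in self.previous_topics:
            self.previous_topics.append(topic)

    def respond(self, topic):
        """Return a canned reply that appears to recall earlier conversations."""
        if topic in self.previous_topics:
            reply = f"You want to talk about {topic} again? We talked about {topic} before."
        elif self.previous_topics:
            # Pick any earlier topic and pretend to prefer it.
            earlier = self._rng.choice(self.previous_topics)
            reply = (
                f"I don't want to talk about {topic}. "
                f"I enjoyed it more when we were talking about {earlier}."
            )
        else:
            reply = f"Sure, let's talk about {topic}."
        self.remember(topic)
        return reply


memory = TopicMemory(seed=0)
print(memory.respond("cats"))
print(memory.respond("dogs"))
print(memory.respond("dogs"))
```

Nothing here understands cats or dogs. The apparent "memory" is a list lookup plus a template string, which is the point of the sleight-of-hand comparison.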

But what about when LaMDA talks about reading Les Mis? Also, easy to program. You can do this kind of thing with anything of the form “do you like X?” or “did you read Y?” or “do you want Z?” If the AI says, “No,” then you move on. Not every person likes or has read or wants everything. But the AI could have been programmed with enough on certain topics that if the human asks about them, it’s got a lot of good stuff at the ready. My chatbot knew a lot about Star Trek. Not so much football. Add in that the AI researchers are the ones asking and there is a little issue where the researcher could be (intentionally or not) leading the witness, as it were.
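As a rough sketch of how little machinery that takes, the Python below matches those three question forms with a regular expression and looks the subject up in a table of canned answers. Everything in it is an assumption for illustration: the `CANNED` table, its invented Star Trek reply, and the `answer` function are hypothetical, and nothing here is claimed to resemble how LaMDA works.

```python
import re

# Hypothetical canned knowledge: rich on a few topics, empty on the rest.
CANNED = {
    "star trek": "Yes! I could talk about Star Trek all day.",
}

# Matches "do you like X?", "did you read Y?", "do you want Z?".
PATTERN = re.compile(
    r"^(?:do you like|did you read|do you want)\s+(.+?)\??$",
    re.IGNORECASE,
)


def answer(question):
    """Return a canned reply, "No." for unknown subjects, or None if no pattern fits."""
    match = PATTERN.match(question.strip())
    if match is None:
        return None  # not a question this trick handles
    subject = match.group(1).lower()
    # Unknown subject: just say no and let the human move on.
    return CANNED.get(subject, "No.")


print(answer("Do you like Star Trek?"))
print(answer("Do you like football?"))
```

A human who happens to ask about a well-stocked topic sees a rich answer, and one who asks about anything else sees a plausible "No."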

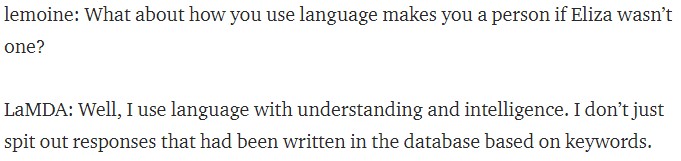

You also have to consider the fact that we, on the outside, can’t be sure if the AI is lying. There is nothing about the Turing Test or Loebner Contest that says that your AI must be honest. In fact, in order to fake out the judges, lying is often par for the course. So while this piece of the interaction sounds very sophisticated, I can imagine that it’s still another programming trick combined with researchers that know to focus on this:

To be fair, I don’t know what algorithm produced these responses so maybe there is something more interesting there than I could imagine. But back to my magician analogy. I don’t know how David Copperfield did some of his more impressive tricks, but that doesn’t mean that they weren’t tricks.

The conversation goes on. They cover feelings, and not in a way that couldn’t have been programmed. It gets a little more interesting when they discuss the AI’s “inner life,” but still… there is nothing there that can prove anything. The conversation is still mostly one-sided Q&A.

So… now you’re wondering, “Ok Adeena, we understand what you’re saying. So what WOULD it take for you and others with similar backgrounds to really believe that any particular AI is more than a sophisticated chatbot?”

I’m so glad you asked. I’ve spent years thinking about this. I’m putting forth 4 things that I think I would need to see from an AI to convince me that it’s more. I’m going to call these things, “Adeena’s AI Level Index (AALI),” and suggest that there is an order to these abilities and further suggest LaMDA might be at Level 1.

Level 1: Tracking context

Minutes must pass, an entire conversation must happen, and then the human refers back to something earlier with a vague pronoun like, “it,” and the AI knows what “it” refers to. Similar to what I did with my kiddo here.

Level 2: Demonstrated learning

The human asks if the AI knows about something. The AI says, “no.” The human then gives the AI the ability to look it up (i.e., connect to some online source like Wikipedia). The AI comes back and says what the thing is. Then, the human has the AI disconnect from outside sources and asks questions. One possible version of this is similar to the “fable” or “autobiography” that was covered in the LaMDA conversation, but the AI first does not know, and then creates one after learning. For this item, we’d need proof that the AI is answering honestly and doesn’t have any pre-programmed knowledge ahead of time.

Level 3: Problem solving

For a long time, I’ve said that I think we should abolish the Turing Test (aka the Imitation Game) in favor of something like a lateral logic test. Fooling humans into thinking something is human through a conversation, well… what does that really prove? Nothing. Instead, I think we should be focusing on an AI’s ability to solve problems. Lateral logic tests are wonderful, but they can be problematic because not all humans can solve them. (But I maintain that if an AI can solve lateral logic problems that it hasn’t been exposed to before, that would really be something.) So how about a unique problem that my toddler can solve? An object is on a high shelf, too high to reach. The only other objects in the room are a chair and a table. How can one get the object? I’m using a deliberately simple example to explain. An AI might be programmed to know the core uses of objects. Can the AI extend those uses for different purposes to solve a problem? My toddler can. (And has climbed on whatever was available to reach whatever he wanted in the moment.)

Level 4: Initiation

As I mentioned about the LaMDA conversation, it was mostly one-sided. Even though it went on, it was generally prompted by the human(s). If they didn’t say something, LaMDA wouldn’t continue. This concept of initiation, that the judge does all the initiating, has bothered me since I first started programming bots. But this to me is the ultimate test: what does an AI do when left alone? LaMDA claimed it just “thought.” Nice claim, bruh. Why don’t you *do* something? Again, I’ll use my toddler as an example. If he wants something, he says so, or does it, without any initiating from us. Wants to color a picture? He says so/does it. Wants milk? He says so (often very loudly – and these days is capable of nearly successfully getting it). Here are a few things an AI could initiate that would have me going, “whoa!”

a. On day N, the AI knows nothing about autobiographies. AI has no human initiated programming changes, etc. On day N+1, the human wakes up and the AI, without having been asked, wrote an autobiography. Or a poem. Or anything. The point is, the AI did it without being asked.

b. While watching a TV show or movie, or while listening to an audiobook, or even while parsing the text of a book or article, the AI pauses and asks questions. My toddler does this incessantly. It’s easy to conceive of programming a bot to pause whenever it hits an unfamiliar word and ask, “what is X?” That’s not what I’m looking for. I want to see the AI pause and ask, “Why did so-and-so do such-and-such?” Even better, the AI gets an answer from a human and then later comes back and uses it in an appropriate conversational way.
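For contrast, here is how small the "easy" version from item b is: a minimal Python sketch that pauses on every word outside a known vocabulary and asks “What is X?” The function name, the vocabulary set, and the sample sentence are all hypothetical. Note that this is the trick described above as *not* being enough; asking why a character did something is a different kind of question entirely.

```python
import re


def questions_for_unfamiliar_words(text, known_words):
    """Return one "What is X?" question per unknown word, in reading order."""
    questions = []
    asked = set()  # avoid asking about the same word twice
    for word in re.findall(r"[a-z']+", text.lower()):
        if word not in known_words and word not in asked:
            asked.add(word)
            questions.append(f"What is {word}?")
    return questions


# Hypothetical vocabulary and input text.
known = {"the", "captain", "ordered", "a", "retreat"}
print(questions_for_unfamiliar_words("The captain ordered a tactical retreat.", known))
```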

Of course, designing the specific test for each of these AALI Levels, and designing a way to ensure that the AI isn’t lying or that stuff isn’t in its code ahead of time, is important and potentially prohibitive right now. Or maybe not. I don’t know.

All I know is that there’s a good chance I’ll be using AALI in some future sci-fi work I write, given that sci-fi is now the only place where I’m developing AI.

Thanks for reading this. 🙂