“Computer, extrapolate…”

Spock, “Wolf in the Fold”

“Correlate following hypothesis.”

Kirk, “Mirror, Mirror”

“Computer, analyze and reply… …Your recommendation?”

Spock, “Wink of an Eye”

These quotes are lines said by Kirk or Spock to the Enterprise computer. Besides a vision of an egalitarian, peaceful, benevolent future, apparently Gene Roddenberry also envisioned one where we would fly around in spaceships with ridiculously advanced AI.

And it was a good thing. The Enterprise computer was there as a tool to help Kirk and his crew solve problems every week. It wasn’t there to replace anybody (well, except in that one episode, “The Ultimate Computer,” where that was the exact plot) but to act as a tool, the same way any other technology is handled. Technologies are tools we use.

There were several episodes in the original Trek where Kirk (or Spock) made use of the computer as a powerhouse of a problem-solving tool. Technology doesn’t appear overnight. Technology evolves as our abilities and scientific knowledge evolve. So, it has to start somewhere.

That somewhere might be the technology of today. Siri, Alexa, Google Assistant, and now tools like ChatGPT are likely the precursors to what we see on the Enterprise. And folks should be excited, not freaked out. Especially if we accept that, in a bright future like Star Trek, these tools are helpful, not harmful.

And there’s no need to freak out that these tools are taking over… they’re nowhere near as advanced as what we see with the capabilities of the Enterprise. (Note: What’s interesting is that in TNG, the Enterprise computer, while we adore that the lovely Majel Barrett Roddenberry voiced it, wasn’t nearly as sophisticated as its predecessor! They seemed to replace that capability with Data, a truly advanced AI. The Enterprise-D computer only provided factual data, responded to commands, etc. The crew rarely asked it to extrapolate or speculate. The one memorable time it did, it wasn’t even the real computer in the real universe, but in the “bubble” universe—I don’t even know what to call it—that had Dr. Crusher trapped in the episode “Remember Me.”)

Try asking Alexa or Siri to “extrapolate” or “analyze.” I asked Alexa, “Alexa, can you extrapolate?” and she responded by giving me the definition of the word and following up with product recommendations. She didn’t even give me a straightforward answer to a straightforward yes/no question.

Don’t get me wrong. I love Alexa (and Google Assistant). They’re super handy when I need to be hands free at home and set a timer in the kitchen, or play music, or find out the weather outside. But they’re not AI. Alexa even has some fun pre-canned answers to things like, “Who is your father?” or “Beam me up!” Over the years, it’s clear that Natural Language Processing capabilities have improved across the board.

If you’re not familiar with the concept, Natural Language Processing (or NLP) is a branch of computer science that deals with understanding human language (spoken or written). I’ve written and spoken about the challenges of NLP several times before, particularly when it comes to keeping an understanding of the context going through a conversation.

We humans do this quite naturally… we understand more than just the spoken or written words. If I had asked my simple yes/no question, “Can you extrapolate?” to a person, it’s possible that they would have answered yes or no and left it there. But it’s more likely they would have taken cues from any prior interactions we’d had, the tone of my voice, or other knowledge about me and why I might be asking, and then provided more than just a “yes” or “no” in response.

When I asked Alexa this question, I expected her to respond with a definitive no… and then maybe add some extra information like the definition, or ask a follow-up question.

In this case, Alexa clearly failed because it didn’t directly answer my question at all. Although indirectly it did by not answering it. <sigh> If I had wanted a definition of “extrapolate” I would have asked for that instead.

Alexa, Google Assistant, and the like (I don’t really ever talk about Siri much because I don’t have one-on-one experience with Siri. I’m Android and PC, not Mac/Apple), while they fall under the modern-day discipline of AI in Computer Science, truly don’t live up to the “I” in AI. Not in the way we’ve seen AI in science fiction, anyway.

In order to really live up to the “I,” that technology is going to need to reason, to extrapolate, and to analyze like humans can. Like the Enterprise 1701 could.

Enter ChatGPT and brand-new freak-outs.

ChatGPT is new and shiny and… very interesting. Everyone has been freaking out about it and upcoming competitors. I like to reference this xkcd comic when people freak out: https://xkcd.com/1289/

Now that we’re done freaking out, the question is: have we now taken some steps to get to the level of AI of the Enterprise?

Yes. It’s better.

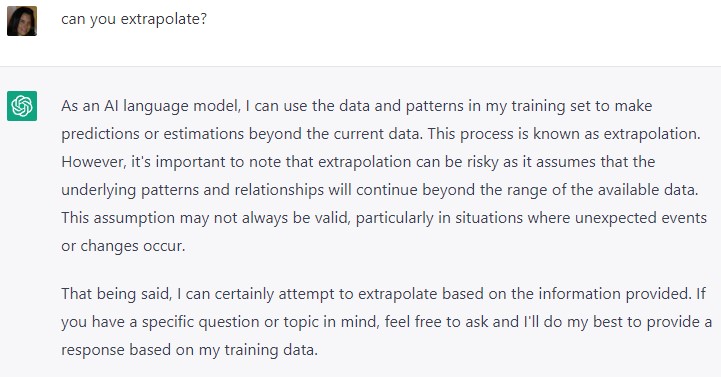

I asked ChatGPT if it could extrapolate. Here’s what it told me:

That’s a pretty cool answer. It’s non-committal. It basically said it would try to provide an answer, but don’t necessarily trust the answer.

I then asked it, “Can you correlate a hypothesis?” because that was something the Enterprise computer could do. I’m not posting the screenshot because it was very long and boiled down to: “No, because all I am is an AI language model. But here’s how you can do it.” (And then it listed out the steps on how to do this.)

So since it said it could try to extrapolate, I poked at that some more.

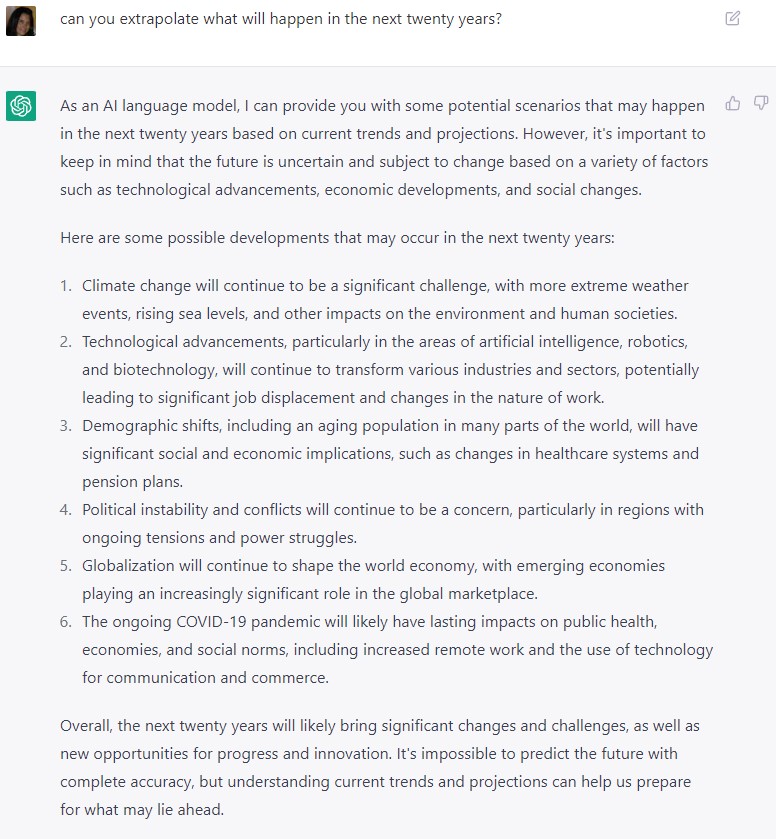

I asked it: “can you extrapolate what will happen in the next twenty years?”

This answer initially sounded pretty good… until I followed up with “what about the next 100 years?” and it gave me a nearly identical answer, which made me realize that the original answer wasn’t insightful in the least.

ChatGPT isn’t replacing the deep thinkers anytime soon. But I am impressed with ChatGPT’s responses, and they give me a deeper appreciation for what the Enterprise computer can do.

The Enterprise computer:

- understands language, possibly the subtleties

- has access to a large store of information

- can relate that information

- has the ability to extrapolate, draw conclusions, make predictions, etc.

The first two items on that list are what we see with something like ChatGPT. ChatGPT even does some of item 3. But item 4 still needs work in the real world. If you ask ChatGPT if it can make predictions, it hedges its answer, much as it did when I asked it about extrapolation, and says that any prediction it might make depends on the accuracy of its training data.

What’s not on that list, and where the next level of advanced AI comes in, is the ability to solve problems. Data fits that level of AI.

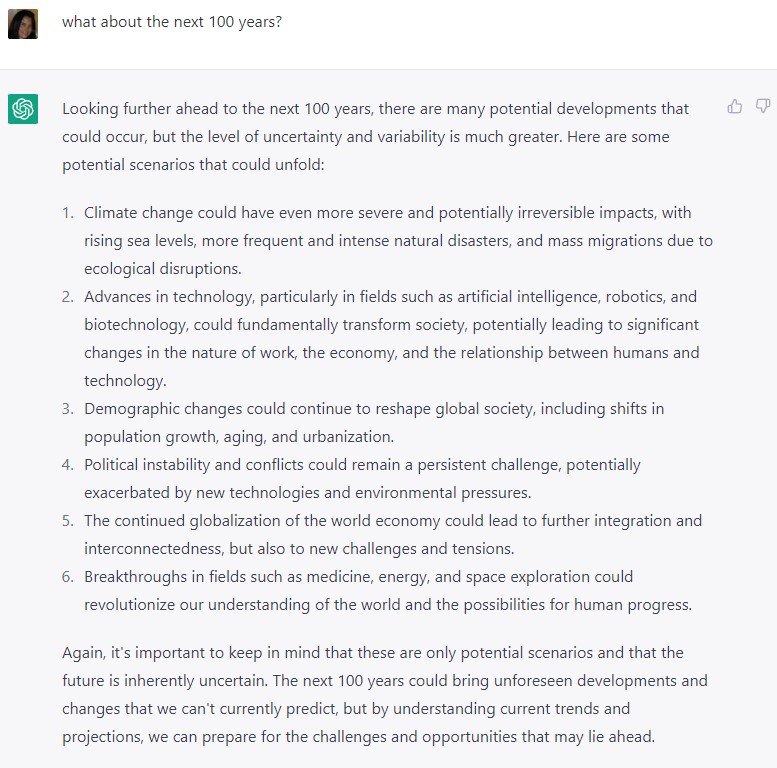

All that said… I’m loving ChatGPT as a brainstorming buddy. For example, before this tool came into our lives, while writing, I would often need to look for synonyms for words on Google. Say I was looking for not just a synonym for ‘computer,’ but a humorous synonym for ‘computer.’ Asking Google yields this:

I get synonyms, but not necessarily humorous ones. I get other links to other humor-related sites. I would need to click on each and see if there are any nuggets or anything that sparked an idea. Essentially, Google granted me synonyms for “computer” and completely ignored my request for “humorous” ones.

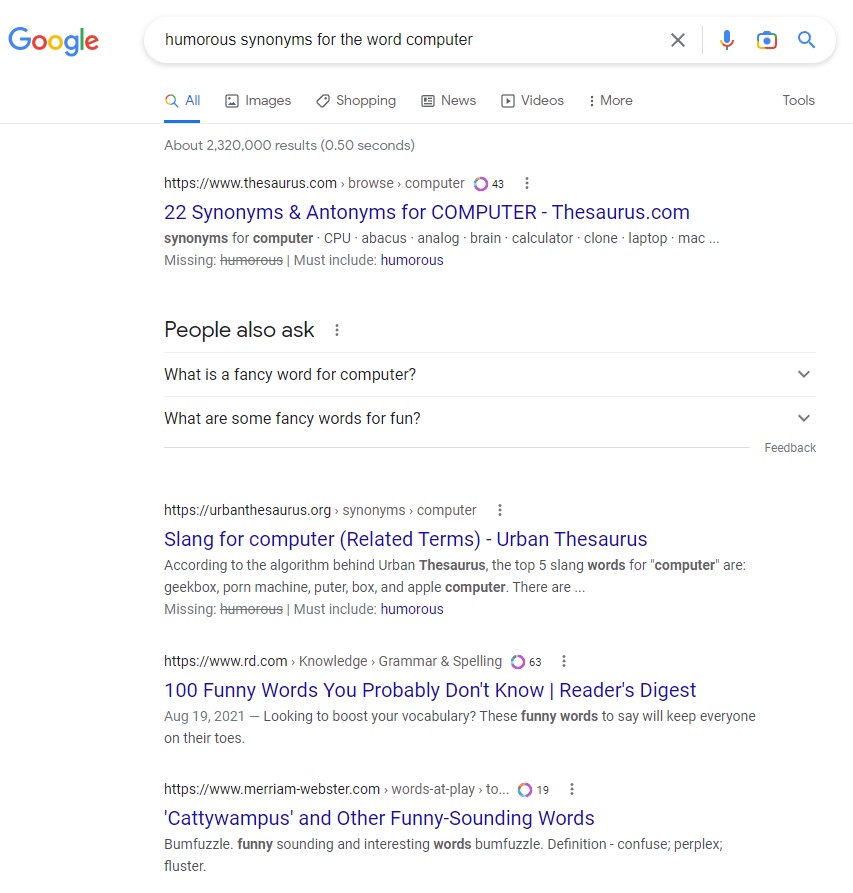

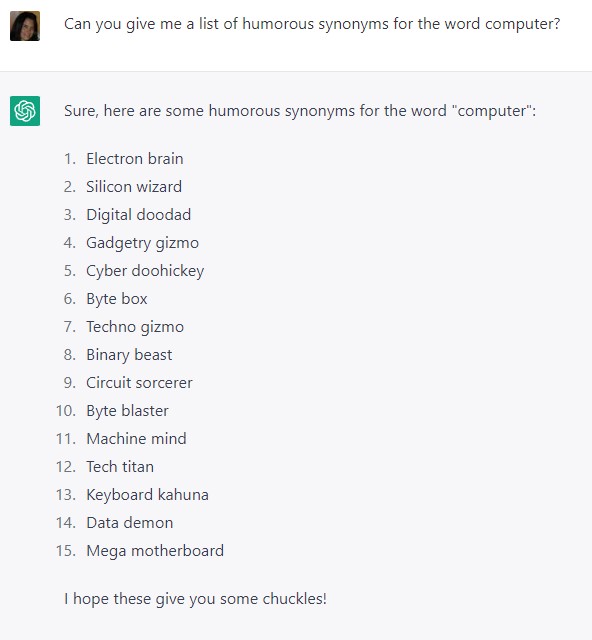

But when I ask ChatGPT, I get the kind of answer a research assistant might give me:

There’s a Facebook group for SFF writers that I’m active in. The kind of results that I just received from ChatGPT are similar to what I would get if I posted the same question there, minus the completely snarky and often unhelpful comments to a request like that (e.g., “what’s wrong with the word computer?” or “what do you mean by humorous (crazy-hilarious, or witty or…?)” or “Try googling it.” or “I’ve never heard of a humorous computer” and so on). In other words, it’s similar to brainstorming with people, minus some of the time-wasting unhelpful bits.

To summarize…

I’m thankful that we’re making progress, and I can envision a time in the future where we’ve integrated tools such as Alexa, Watson, ChatGPT, and others to make our increasingly complex lives more manageable. From writing tasks, to medical diagnosis, to engineering, to navigating starships across the galaxy and encountering new problems, these technologies can help us move closer to achieving our goals, individually or collectively.
